萬(wàn)法歸宗之Hadoop編程無(wú)界限

來(lái)源:程序員人生 發(fā)布時(shí)間:2015-04-20 08:08:06 閱讀次數(shù):3490次

記錄下,散仙今天的工作和遇到的問(wèn)題和解決方案,俗語(yǔ)說(shuō),好記性不如爛筆頭,寫(xiě)出來(lái)文章,供大家參考,學(xué)習(xí)和點(diǎn)評(píng),進(jìn)步,才是王道 ,空話(huà)不多說(shuō),下面切入主題:

先介紹下需求:

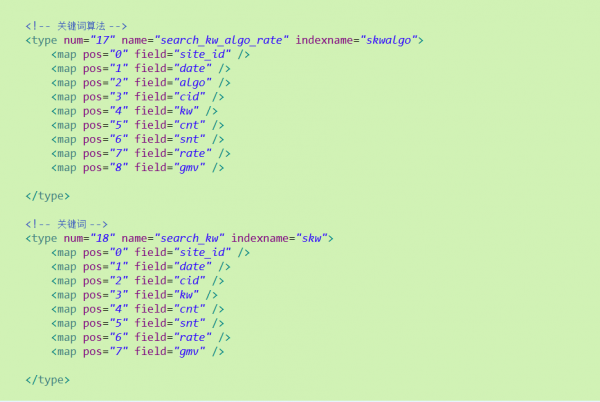

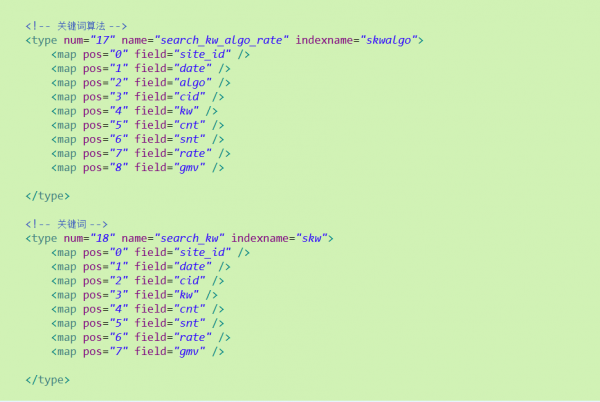

散仙要處理多個(gè)類(lèi)似表的txt數(shù)據(jù),固然只有值,列名甚么的全部在xml里配置了,然后加工這些每一個(gè)表的每行數(shù)據(jù),生成特定的格式基于A(yíng)SCII碼1和ASCII碼2作為分隔符的1行數(shù)據(jù),ASCII2作為字段名和字段值的分隔符,ASCII1作為字段和字段之間的分隔符,每解析1個(gè)txt文件時(shí),都要獲得文件名,然后與xml中的schema信息映照并找到對(duì)應(yīng)位置的值,它的列名,條件是,這些的txt的內(nèi)容位置,是固定的,然后我們知道它每行屬于哪一個(gè)表結(jié)構(gòu)的映照,由于這些映照信息是提早配置在xml中的,以下圖:

固然類(lèi)似這樣的結(jié)構(gòu)有20個(gè)左右的表文件,到時(shí)候,我們的數(shù)據(jù)方,會(huì)給我們提供這些txt文件,然后散仙需要加工成特定的格式,然后寫(xiě)入HDFS,由我們的索引系統(tǒng)使用MapReduce批量建索引使用。

本來(lái)想直接用java寫(xiě)個(gè)單機(jī)程序,串行處理,然后寫(xiě)入HDFS,后來(lái)1想假設(shè)數(shù)據(jù)量比較大,串行程序還得改成多線(xiàn)程并行履行,這樣改來(lái)改去,倒不如直接使用MapReduce來(lái)的方便

ok,說(shuō)干就干,測(cè)試環(huán)境已有1套CDH5.3的hadoop2.5集群,直接就在eclipse進(jìn)行開(kāi)發(fā)和MapReduce程序的調(diào)試,反正也好久也沒(méi)手寫(xiě)MapReduce了,前段時(shí)間,1直在用Apache

Pig分析數(shù)據(jù),這次處理的邏輯也不復(fù)雜,就再寫(xiě)下練練手 , CDH的集群在遠(yuǎn)程的服務(wù)器上,散仙本機(jī)的hadoop是Apache Hadoop2.2的版本,在使用eclipse進(jìn)行開(kāi)發(fā)時(shí),也沒(méi)來(lái)得及換版本,理論上最好各個(gè)版本,不同發(fā)行版,之間對(duì)應(yīng)起來(lái)開(kāi)發(fā)比較好,這樣1般不會(huì)存在兼容性問(wèn)題,但散仙這次就懶的換了,由于CDH5.x以后的版本,是基于社區(qū)版的Apache Hadoop2.2之上改造的,接口應(yīng)當(dāng)大部份都1致,固然這只是散仙料想的。

(1)首先,散仙要弄定的事,就是解析xml了,在程序啟動(dòng)之前需要把xml解析,加載到1個(gè)Map中,這樣在處理每種txt時(shí),會(huì)根據(jù)文件名來(lái)去Map中找到對(duì)應(yīng)的schma信息,解析xml,散仙直接使用的jsoup,具體為啥,請(qǐng)點(diǎn)擊散仙這篇

http://qindongliang.iteye.com/blog/2162519文章,在這期間遇到了1個(gè)比較蛋疼的問(wèn)題,簡(jiǎn)直是1個(gè)bug,最早散仙定義的xml是每一個(gè)表,1個(gè)table標(biāo)簽,然后它下面有各個(gè)property的映照定義,但是在用jsoup的cssQuery語(yǔ)法解析時(shí),發(fā)現(xiàn)總是解析不出來(lái)東西,依照之前的做法,是沒(méi)任何問(wèn)題的,這次簡(jiǎn)直是開(kāi)玩笑了,后來(lái)就是各種搜索,測(cè)試,最后才發(fā)現(xiàn),將table標(biāo)簽,換成其他的任何標(biāo)簽都無(wú)任何問(wèn)題,具體緣由,散仙還沒(méi)來(lái)得及細(xì)看jsoup的源碼,猜想table標(biāo)簽應(yīng)當(dāng)是1個(gè)關(guān)鍵詞甚么的標(biāo)簽,在解析時(shí)會(huì)和html的table沖突,所以在xml解析中失效了,花了接近2個(gè)小時(shí),求證,檢驗(yàn),終究弄定了這個(gè)小bug。

(2)弄定了這個(gè)問(wèn)題,散仙就開(kāi)始開(kāi)發(fā)調(diào)試MapReduce版的處理程序,這下面臨的又1個(gè)問(wèn)題,就是如何使用Jsoup解析寄存在HDFS上的xml文件,有過(guò)Hadoop編程經(jīng)驗(yàn)的人,應(yīng)當(dāng)都知道,HDFS是1套散布式的文件系統(tǒng),與我們本地的磁盤(pán)的存儲(chǔ)方式是不1樣的,比如你在正常的JAVA程序上解析在C:file .tx或在linux上/home/user/t.txt,所編寫(xiě)的程序在Hadoop上是沒(méi)法使用的,你得使用Hadoop提供的編程接口獲得它的文件信息,然后轉(zhuǎn)成字符串以后,再給jsoup解析。

(3)ok,第2個(gè)問(wèn)題弄定以后,你得編寫(xiě)你的MR程序,處理對(duì)應(yīng)的txt文本,而且保證不同的txt里面的數(shù)據(jù)格式,所獲得的scheaml是正確的,所以在map方法里,你要獲得固然處理文件的路徑,然后做相應(yīng)判斷,在做對(duì)應(yīng)處理。

(4)很好,第3個(gè)問(wèn)題弄定以后,你的MR的程序,基本編寫(xiě)的差不多了,下1步就改斟酌如何提交到Hadoop的集群上,來(lái)調(diào)試程序了,由于散仙是在Win上的eclipse開(kāi)發(fā)的,所以這1步可能遇到的問(wèn)題會(huì)很多,而且加上,hadoop的版本不1致與發(fā)行商也不1致,出問(wèn)題也純屬正常。

這里多寫(xiě)1點(diǎn),1般建議大家不要在win上調(diào)試hadoop程序,這里的坑非常多,如果可以,還是建議大家在linux上直接玩,下面說(shuō)下,散仙今天又踩的坑,關(guān)于在windows上調(diào)試eclipse開(kāi)發(fā), 運(yùn)行Yarn的MR程序,散仙之前也記錄了文章,感興趣者,可以點(diǎn)擊這個(gè)鏈接

http://qindongliang.iteye.com/blog/2078452地址。

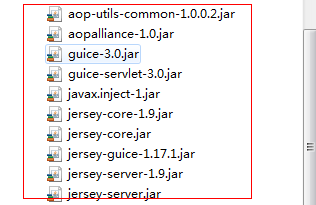

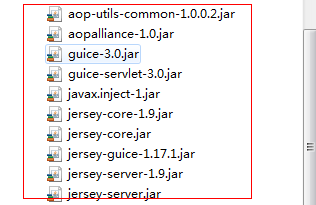

(5)提交前,是需要使用ant或maven或java自帶的導(dǎo)出工具,將項(xiàng)目打成1個(gè)jar包提交的,這1點(diǎn)大家需要注意下,最后測(cè)試得出,Apache的hadoop2.2編寫(xiě)的MR程序,是可以直接向CDH Hadoop2.5提交作業(yè)的,但是由于hadoop2.5中,使用google的guice作為了1個(gè)內(nèi)嵌的MVC輕量級(jí)的框架,所以在windows上打包提交時(shí),需要引入額外的guice的幾個(gè)包,截圖以下:

上面幾步弄定后,打包全部項(xiàng)目,然后運(yùn)行成功,進(jìn)程以下:

上面幾步弄定后,打包全部項(xiàng)目,然后運(yùn)行成功,進(jìn)程以下:

-

輸前途徑存在,已刪除!

-

2015-04-08 19:35:18,001 INFO [main] client.RMProxy (RMProxy.java:createRMProxy(56)) - Connecting to ResourceManager at /172.26.150.18:8032

-

2015-04-08 19:35:18,170 WARN [main] mapreduce.JobSubmitter (JobSubmitter.java:copyAndConfigureFiles(149)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

-

2015-04-08 19:35:21,156 INFO [main] input.FileInputFormat (FileInputFormat.java:listStatus(287)) - Total input paths to process : 2

-

2015-04-08 19:35:21,219 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:submitJobInternal(394)) - number of splits:2

-

2015-04-08 19:35:21,228 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - user.name is deprecated. Instead, use mapreduce.job.user.name

-

2015-04-08 19:35:21,228 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.jar is deprecated. Instead, use mapreduce.job.jar

-

2015-04-08 19:35:21,228 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - fs.default.name is deprecated. Instead, use fs.defaultFS

-

2015-04-08 19:35:21,229 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

-

2015-04-08 19:35:21,229 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.mapoutput.value.class is deprecated. Instead, use mapreduce.map.output.value.class

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.job.name is deprecated. Instead, use mapreduce.job.name

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

-

2015-04-08 19:35:21,230 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class

-

2015-04-08 19:35:21,231 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

-

2015-04-08 19:35:21,233 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.mapoutput.key.class is deprecated. Instead, use mapreduce.map.output.key.class

-

2015-04-08 19:35:21,233 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir

-

2015-04-08 19:35:21,331 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:printTokens(477)) - Submitting tokens for job: job_1419419533357_5012

-

2015-04-08 19:35:21,481 INFO [main] impl.YarnClientImpl (YarnClientImpl.java:submitApplication(174)) - Submitted application application_1419419533357_5012 to ResourceManager at /172.21.50.108:8032

-

2015-04-08 19:35:21,506 INFO [main] mapreduce.Job (Job.java:submit(1272)) - The url to track the job: http:

-

2015-04-08 19:35:21,506 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1317)) - Running job: job_1419419533357_5012

-

2015-04-08 19:35:33,777 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1338)) - Job job_1419419533357_5012 running in uber mode : false

-

2015-04-08 19:35:33,779 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1345)) - map 0% reduce 0%

-

2015-04-08 19:35:43,885 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1345)) - map 100% reduce 0%

-

2015-04-08 19:35:43,902 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1356)) - Job job_1419419533357_5012 completed successfully

-

2015-04-08 19:35:44,011 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1363)) - Counters: 27

-

File System Counters

-

FILE: Number of bytes read=0

-

FILE: Number of bytes written=166572

-

FILE: Number of read operations=0

-

FILE: Number of large read operations=0

-

FILE: Number of write operations=0

-

HDFS: Number of bytes read=47795

-

HDFS: Number of bytes written=594

-

HDFS: Number of read operations=12

-

HDFS: Number of large read operations=0

-

HDFS: Number of write operations=4

-

Job Counters

-

Launched map tasks=2

-

Data-local map tasks=2

-

Total time spent by all maps in occupied slots (ms)=9617

-

Total time spent by all reduces in occupied slots (ms)=0

-

Map-Reduce Framework

-

Map input records=11

-

Map output records=5

-

Input split bytes=252

-

Spilled Records=0

-

Failed Shuffles=0

-

Merged Map outputs=0

-

GC time elapsed (ms)=53

-

CPU time spent (ms)=2910

-

Physical memory (bytes) snapshot=327467008

-

Virtual memory (bytes) snapshot=1905754112

-

Total committed heap usage (bytes)=402653184

-

File Input Format Counters

-

Bytes Read=541

-

File Output Format Counters

-

Bytes Written=594

-

true

最后附上核心代碼,以作備忘:

(1)Map Only作業(yè)的代碼:

-

package com.dhgate.search.rate.convert;

-

-

import java.io.File;

-

import java.io.FileInputStream;

-

import java.io.FileNotFoundException;

-

import java.io.FilenameFilter;

-

import java.io.IOException;

-

import java.util.Map;

-

-

import org.apache.hadoop.conf.Configuration;

-

import org.apache.hadoop.fs.FileSystem;

-

import org.apache.hadoop.fs.Path;

-

import org.apache.hadoop.io.LongWritable;

-

import org.apache.hadoop.io.NullWritable;

-

import org.apache.hadoop.io.Text;

-

import org.apache.hadoop.mapreduce.Job;

-

import org.apache.hadoop.mapreduce.Mapper;

-

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

-

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

-

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

-

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

-

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

-

import org.slf4j.Logger;

-

import org.slf4j.LoggerFactory;

-

-

import com.dhgate.parse.xml.tools.HDFSParseXmlTools;

-

import com.sun.xml.bind.v2.schemagen.xmlschema.Import;

-

-

-

-

-

-

-

-

public class StoreConvert {

-

-

-

static Logger log=LoggerFactory.getLogger(StoreConvert.class);

-

-

-

生活不易,碼農(nóng)辛苦

如果您覺(jué)得本網(wǎng)站對(duì)您的學(xué)習(xí)有所幫助,可以手機(jī)掃描二維碼進(jìn)行捐贈(zèng)

------分隔線(xiàn)----------------------------

------分隔線(xiàn)----------------------------